Is Cloud-Native Infrastructure Dead?

Cloud-native infrastructure, once a hot topic in the tech world, introduced advanced technologies such as containerization, microservices, and automated deployment, significantly improving the scalability and resilience of applications. However, in recent years, we seem to hear less about technologies like Kubernetes, Service Mesh, and Serverless, with AI, GPT, large models, and generative AI taking the spotlight instead. Does this mean the cloud-native infrastructure trend has passed? Has cloud-native infrastructure lost its appeal? This article explores the glorious history, current state, challenges, and future trends of cloud-native infrastructure from multiple angles.

1 The Glorious Past of Cloud-Native Infrastructure

Several milestones mark the development of cloud-native infrastructure:

2013: Docker was released, leading the shift from virtual machines to containers.

2014: Kubernetes was launched, setting the standard for container orchestration.

2015: CNCF was established, providing organizational support for cloud-native technologies.

2017: Kubernetes became the de facto standard in container orchestration, and cloud-native technology experienced rapid growth.

2018: Kubernetes became the first CNCF project to reach graduated status, and Istio 1.0 was released.

2019: Cloud-native technology reached its peak. CNCF graduated eight projects and added 173 new members (a 50% growth). Numerous open-source cloud-native projects emerged, such as Alibaba’s OAM, Huawei’s Volcano, and Microsoft’s Dapr. The market witnessed a boom in cloud-native companies, and the cloud-native industry thrived.

2020: Because of the pandemic, in-person KubeCon events were canceled or moved online.

2021: Knative 1.0 was released, and cloud providers heavily promoted serverless technologies.

2 The Current Industry Landscape

2.1 The Rise of AI and Cloud-Native’s Adoption of AI

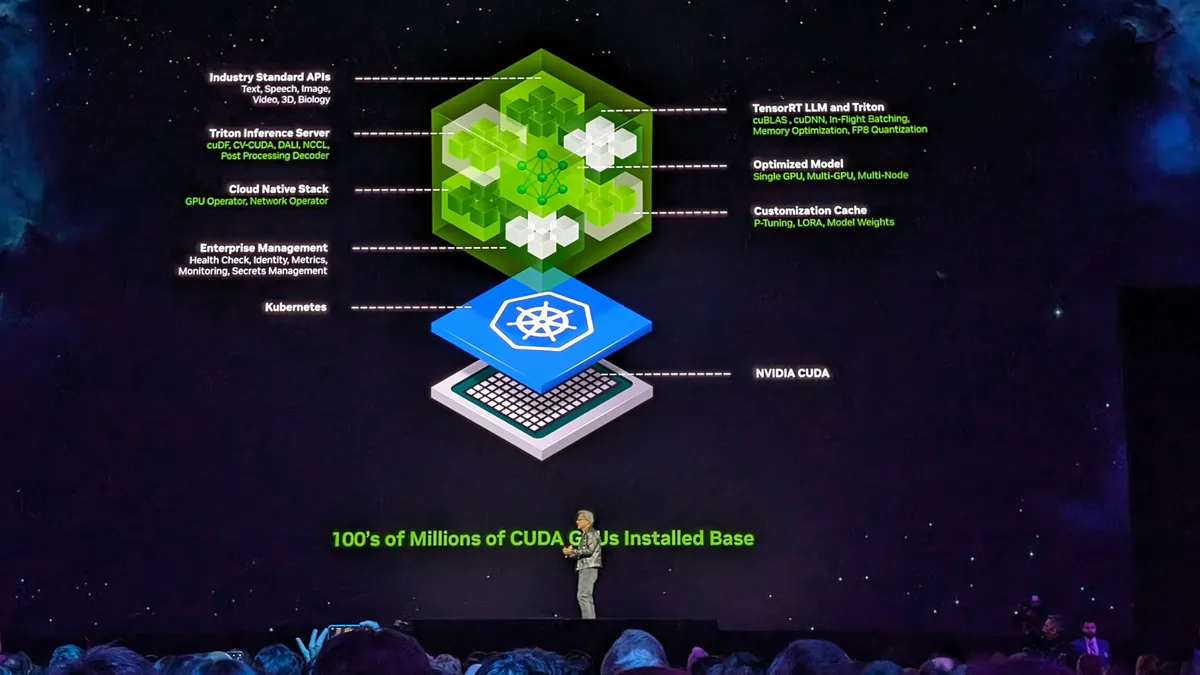

With the growing prominence of ChatGPT and large AI models, AI has taken center stage in the tech field. Although many of these large models run on Kubernetes, attention now goes to the capabilities of the models and their application scenarios rather than to the management of the underlying infrastructure.

KubeCon has shifted towards AI as well. For instance, at KubeCon China 2024, at least 40 sessions were dedicated to AI, primarily focusing on how to better run large AI models on Kubernetes.

2.2 Startups and Funding

In recent years, there have been fewer new cloud-native infrastructure startups (most new startups now require an AI angle). Since 2021, fundraising news has also become rare (the last significant funding event was PingCAP’s Series E round in July 2021). Many cloud-native companies in China, PingCAP among them, have pivoted to other markets or turned their attention overseas.

2.3 Acquisitions and Closures

- October 31, 2020: SUSE completed its acquisition of Rancher Labs.

- January 15, 2021: Banzai Cloud was acquired by Cisco.

- April 2021: Caicloud was acquired by ByteDance.

- December 21, 2023: Cisco announced its intent to acquire Isovalent, the company behind Cilium.

- February 5, 2024: The cloud-native star startup Weaveworks announced its closure.

- July 17, 2024: GitLab was reported to be exploring a sale.

These events highlight shifts in the market and how companies are adapting.

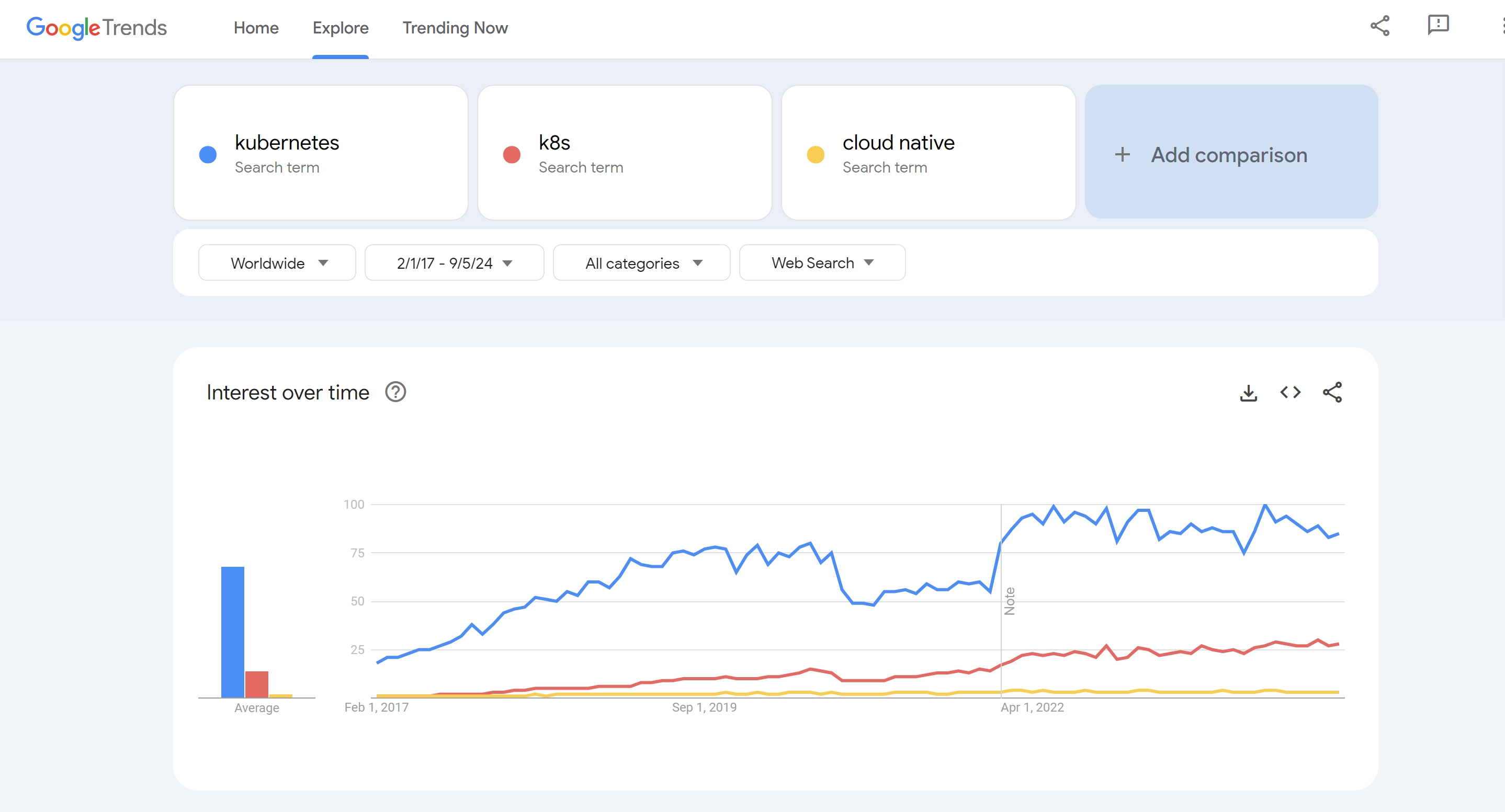

2.4 Google Kubernetes Search Trends

Google Trends shows a fluctuation in Kubernetes’ popularity. Interest rose steadily from 2017 to 2020, then began to decline between September 2020 and December 2021. From January 2022 onwards, the trend remained stable, though a downward trajectory emerged by April 2024.

2.5 Job Market

The job market reflects the reduced demand for cloud-native skills, with fewer available roles and more competition. Kubernetes-related positions that appeared on job portals two months ago are still listed today, and only a few new ones appear sporadically. Layoffs across many companies in recent years have intensified the competition, leading to a “more applicants than positions” situation.

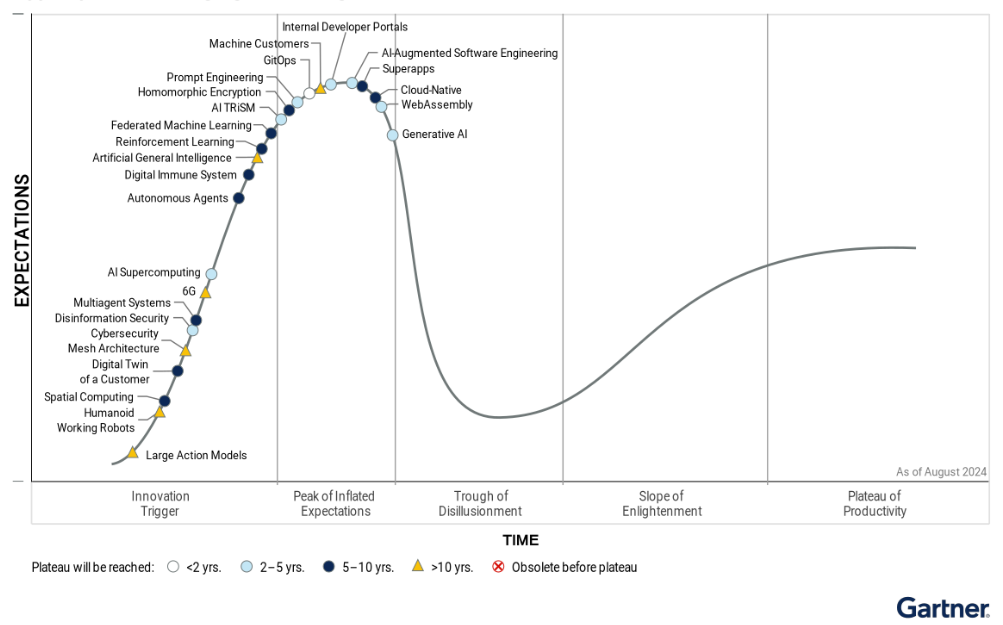

2.6 Hype Cycle

According to Gartner’s 2024 “Hype Cycle for Emerging Technologies” report, cloud-native infrastructure is now at the “Peak of Inflated Expectations”, the point after which hype typically begins to decline.

3 Community and Open Source Project Health

The open-source community has been a cornerstone of cloud-native technology development, with the Kubernetes community being the most active, serving as the core of cloud-native infrastructure.

3.1 Community Activity

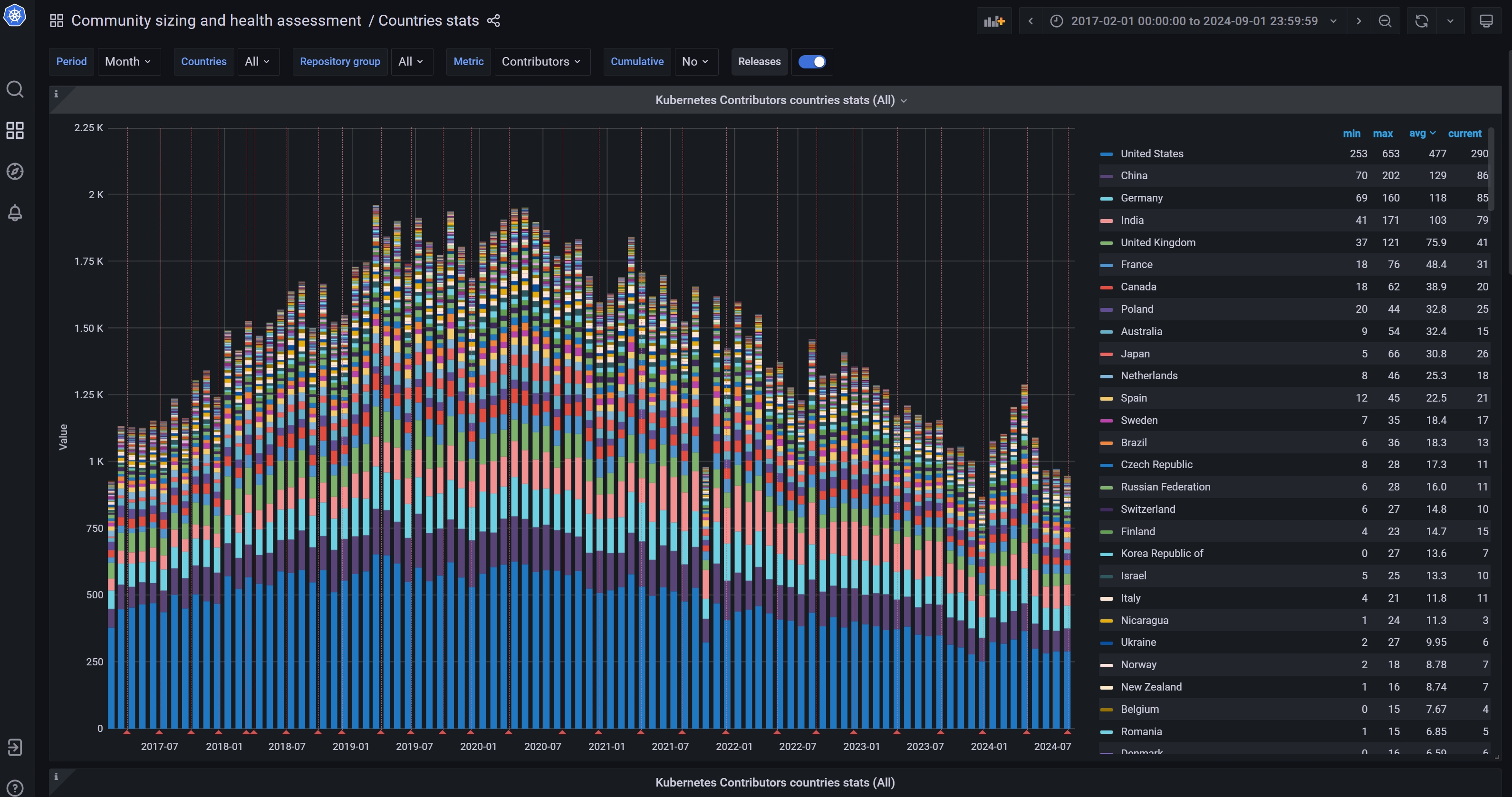

As Kubernetes has matured, its community’s innovation has slowed down. The number of contributors peaked between 2019 and 2020 but began to decline afterward. Although there was a slight rebound in the first quarter of 2024, the overall trend suggests a decrease in community innovation.

3.2 Commercial Support

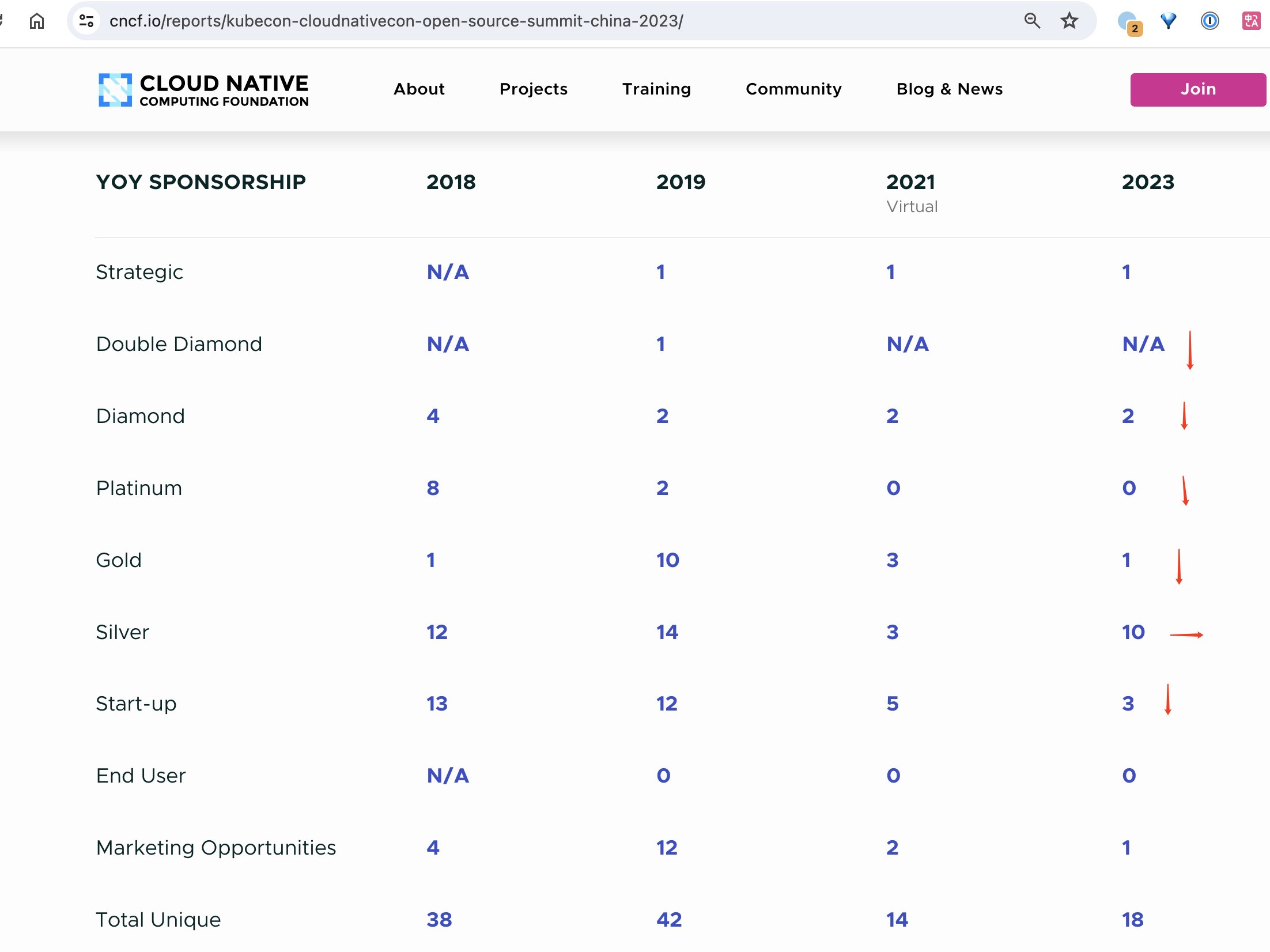

The healthy development of open-source communities relies heavily on commercial support. The status of sponsors often reflects the health of an open-source project. The decline in the number of sponsors at KubeCon, along with a drop in the number of high-level sponsors, indicates weakened commercial support, which has impacted the promotion and development of projects.

The chart below shows the sponsorship situation for KubeCon China over the years.

KubeCon China 2024 sponsorship: 1 Diamond sponsor, 1 Platinum sponsor, 1 Gold sponsor, and 13 Silver sponsors.

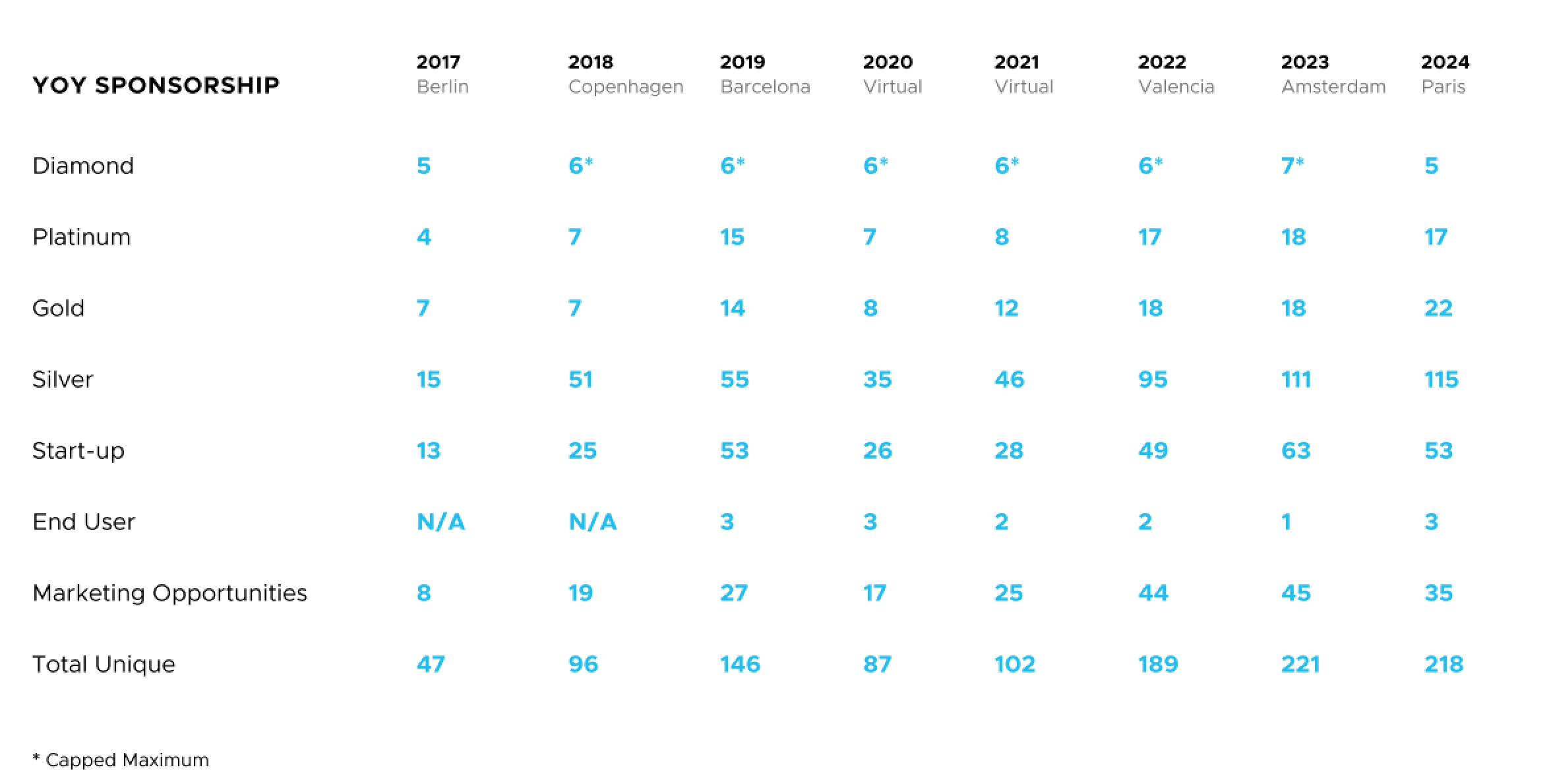

KubeCon Europe 2024 had significantly more sponsors than KubeCon China, and the overall number has been growing. However, the number of high-tier sponsors decreased compared to 2023.

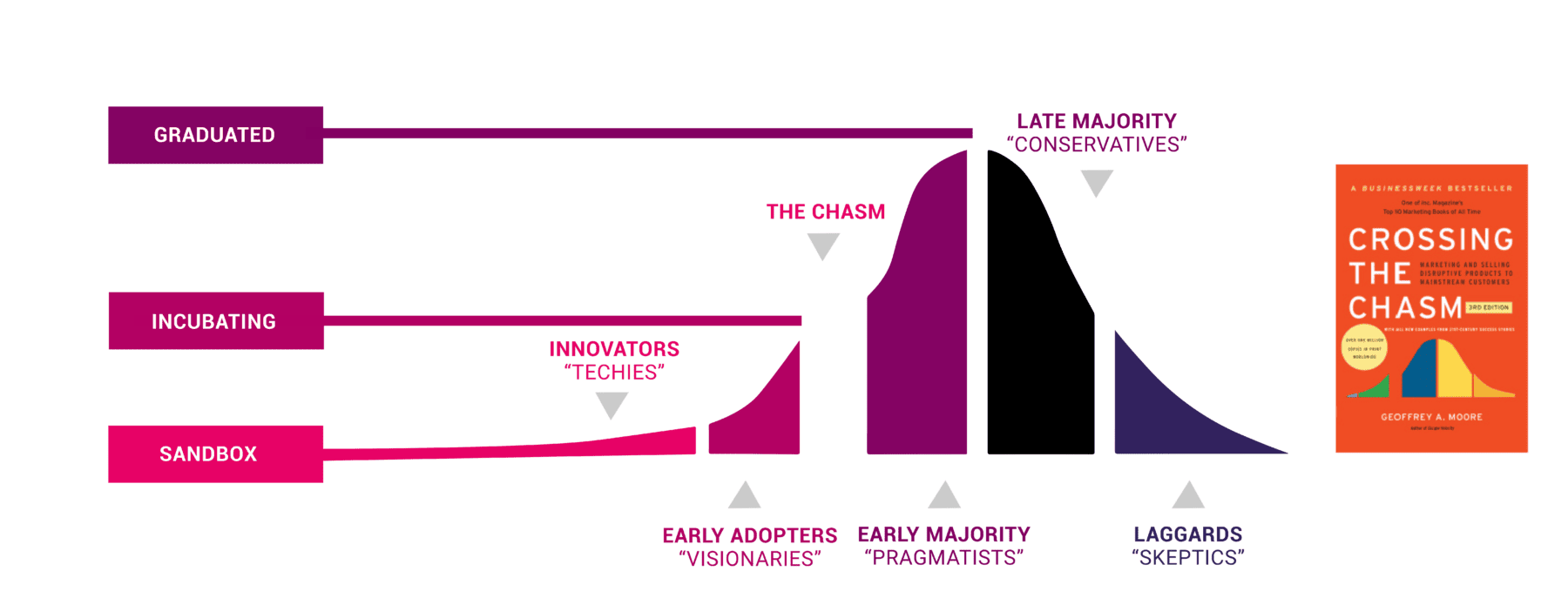

3.3 Kubernetes Project Maturity

According to the CNCF Project Maturity Levels, Kubernetes is currently in the late majority phase of adoption, also referred to as the “conservatives” phase, which sits past the peak of the adoption curve.

4 Why This Is Happening

What are the reasons behind the gradual decline of cloud-native infrastructure, and why is it no longer the focus of attention?

4.1 Emergence of New Technologies

Since the release of ChatGPT, large AI models have become the center of attention. Major tech companies have launched their own large models, while AI startups continue to emerge, attracting significant investment from capital markets.

Although these large AI models run on Kubernetes, people are less concerned with how Kubernetes manages these models or GPUs. Only a few companies have the capability to work with these large models, so the focus has shifted to the capabilities of the models themselves and their application scenarios.

In short, the buzz surrounding large AI models has drawn attention away from cloud-native technologies, even though those models depend on them.

4.2 Cost Issues

The complexity of cloud-native technologies has increased infrastructure maintenance costs. In the current economic downturn, where companies are looking to reduce costs and improve efficiency, investments in cloud-native infrastructure have decreased.

Although cloud-native technologies offer flexibility and scalability, the use of microservice architecture, containerization, Kubernetes, and service mesh has increased the complexity of application development, deployment, and management. This has led to higher maintenance costs, requiring companies to hire a large number of specialized personnel for upkeep. For applications that rely heavily on microservices and containerization, the infrastructure becomes more complex. Additionally, the elasticity and scalability of cloud-native architectures can lead to unpredictable costs, especially when resources are poorly managed.

In times of economic downturn, with reduced business and traffic, cloud-native infrastructure becomes a significant cost burden, prompting companies to scale back their investments, leading to a reduction in market demand and jobs in the sector.

4.3 Technical Complexity

Cloud-native infrastructure includes a wide range of technologies, such as containerization, Kubernetes, service mesh, serverless, and observability, making it overwhelming for newcomers to learn. The steep learning curve and increasing maturity of cloud-native technologies have raised the barriers to entry. Practitioners need to cover a broad range of knowledge while also going deep in specific areas. For instance, those working on service mesh need to understand microservices, containerization, Kubernetes, observability, logging, traffic routing, sidecars, data planes, control planes, xDS, and Envoy, among others.

The steep learning curve and rising entry barriers have made it increasingly difficult for new talent to enter the field of cloud-native infrastructure.

4.4 Competition and Market Saturation

Cloud providers have become increasingly mature in offering cloud-native infrastructure, lowering the barrier for companies to adopt these technologies. For instance, some companies have adopted managed serverless and edge computing services as simpler, more direct solutions that avoid the complexity of managing infrastructure. Serverless, in particular, has attracted developers who prefer not to manage infrastructure, offering simplified architectures and greater agility. Smaller companies no longer need cloud-native infrastructure specialists, which leaves room for them only in large enterprises.

Cloud providers have developed rapidly in recent years, offering better technology at lower cost, and have built a deep moat. Coupled with the economic downturn, companies have reduced their investments in infrastructure, leading to decreased demand for cloud-native infrastructure and a saturated market. As a result, there have been few new cloud-native startups and little financing news in this field in recent years.

5 The Future of Cloud-Native Infrastructure

The future of cloud-native infrastructure is filled with uncertainties, but it is far from fading away. Kubernetes remains the platform on which modern applications run, including many of today’s large AI models.

5.1 The Role of Kubernetes

Despite the challenges, Kubernetes will continue to serve as the foundational platform for many applications. Technological innovation will keep driving the evolution of cloud-native infrastructure. As AI and machine learning become more prevalent, cloud-native infrastructure will evolve to support larger-scale computing and data processing. For instance, Kubernetes 1.31 introduced alpha support for mounting OCI images and artifacts as read-only volumes, which suits the distribution of large model files. Kubernetes remains the backbone for running all types of applications, whether online services, offline tasks, model inference, or machine learning.
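As a rough illustration of the read-only OCI volume feature mentioned above, the sketch below builds a Pod manifest that mounts an OCI artifact under `/models` and prints it as JSON, which `kubectl apply -f` accepts. It assumes a cluster where the alpha `ImageVolume` feature gate is enabled and the container runtime supports it. The helper name `model_pod_manifest` and both image references are hypothetical placeholders, not taken from any real registry.

```python
import json


def model_pod_manifest(name: str, server_image: str, model_ref: str) -> dict:
    """Build a Pod manifest that mounts an OCI artifact as a read-only volume.

    All arguments are illustrative; substitute real image references.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": "inference",
                    "image": server_image,
                    # The model files appear under /models inside the container.
                    "volumeMounts": [
                        {"name": "model", "mountPath": "/models", "readOnly": True}
                    ],
                }
            ],
            "volumes": [
                {
                    "name": "model",
                    # "image" volume source: alpha in Kubernetes 1.31,
                    # gated behind the ImageVolume feature gate.
                    "image": {
                        "reference": model_ref,
                        "pullPolicy": "IfNotPresent",
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = model_pod_manifest(
        name="llm-inference",
        server_image="registry.example.com/inference-server:latest",  # hypothetical
        model_ref="registry.example.com/models/demo-llm:v1",  # hypothetical
    )
    print(json.dumps(manifest, indent=2))
```

Packaging the model as its own OCI artifact keeps it out of the serving image, so the two can be versioned and pulled independently.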

5.2 Shift in Capital Focus

With changes in the economic landscape, capital is increasingly focusing on fields that can generate direct returns. The investment enthusiasm for cloud-native infrastructure may decline, but the foundational role of the technology remains unchanged.

From a capital perspective, the complexity of cloud-native infrastructure has driven up the cost of building it (requiring companies to hire a large number of specialized, highly paid professionals). In the current economic downturn, capital is more interested in investments that yield immediate returns, and since infrastructure spending does not show up directly in output, it may be one of the first areas to see budget cuts. This could lead to a shrinking market for cloud-native infrastructure, with fewer startups in this space.

5.3 Changes in Workforce

The number of professionals working in this field may decrease, and community activity may dwindle. Only large enterprises will be capable of handling infrastructure at scale. In the context of cost-cutting and efficiency improvements, many professionals, like myself, who have been laid off may struggle to find jobs. Even though AI model training on Kubernetes might create some new roles, only a few large companies can truly work with these models, meaning the overall increase in jobs will be limited.

As technology matures, cloud-native infrastructure may become simpler and easier to use, much like Linux has over time. It could evolve into something more foundational, where most people only need to understand its basic principles and usage, with systems like Sealos positioning themselves as cloud-native operating systems. Although the number of professionals in the field may decline, the essential nature of the technology will persist, and new roles and opportunities may emerge.

6 Conclusion

The buzz around cloud-native infrastructure is gradually fading, with a noticeable decline in community activity and market demand. The rise of AI models, especially ChatGPT, has diverted attention from Kubernetes and its related technologies, leading to reduced investment and fewer startups in this space. At the same time, economic downturns and cost-cutting measures have led companies to reduce their investments in cloud-native infrastructure. The increasing technical complexity has also raised the barrier for new entrants.

From a capital perspective, cloud-native infrastructure has cooled off. Newcomers should be cautious about entering this field due to its steep learning curve, dwindling job opportunities, and high expectations.

However, Kubernetes will continue to play a critical role as the foundation for many applications, particularly in AI and large-scale computing. In the future, more simplified and user-friendly solutions may emerge to replace complex cloud-native architectures. For both professionals and companies, understanding and adapting to these changes will be crucial for future success.

7 References

https://developer.nvidia.com/nim

KubeCon + CloudNativeCon Europe 2024 Conference Transparency

Kubernetes Contributors countries stats (All)

KubeCon + CloudNativeCon + Open Source Summit + AI_dev China 2024