A CNI 'Chicken-and-Egg' Dilemma: How Does Calico Assign IPs to Itself?

While researching CNI recently, I recalled an interesting issue I encountered while developing network plugins and investigating Calico: Calico assigns IP addresses to the Pods of its own components (e.g., calico-kube-controllers). How does Calico achieve this? From the installation of the Calico network plugin to the assignment of IPs to its own Pods, what happens at the underlying level?

This essentially poses a “chicken-and-egg” problem: running a Pod requires the CNI plugin, while the CNI plugin’s operation depends on the proper functioning of other Pods.

This analysis is based on Cilium v1.16.5, Calico v3.29.1, and Kubernetes v1.23.

1 Prerequisite Knowledge

When a node does not have a CNI plugin installed, the Node will remain in the NotReady state and will have the following taints:

- node.kubernetes.io/network-unavailable (Effect: NoSchedule)
- node.kubernetes.io/not-ready (Effect: NoExecute)

The current v0.x and v1.x versions of the CNI specification require only two things: a binary file (usually located in /opt/cni/bin) and a configuration file (usually located under /etc/cni/net.d as *.conf or *.conflist). The Agent and Operator components of Cilium and Calico are custom extensions that the specification does not require.

The network modes for Cilium and Calico Pods in different configurations are as follows:

| CNI | Agent | cilium-operator / calico-kube-controllers | Typha (Calico only) |
|---|---|---|---|
| calico + kubernetes mode | host-network | pod network | |
| calico + kubernetes mode + typha | host-network | pod network | host-network |
| calico + etcd mode | host-network | host-network | |
| cilium | host-network | host-network | |

Using calico + kubernetes mode as an example, let’s analyze what happens at the underlying level after Calico CNI installation.

2 kubelet Starts calico-node

DaemonSet Pods can tolerate the node.kubernetes.io/network-unavailable and node.kubernetes.io/not-ready taints, allowing calico-node Pods to be scheduled onto NotReady nodes.
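The matching rule behind this can be sketched in a few lines. The types below are pared-down stand-ins for the Kubernetes API types, only "Exists"-style tolerations are modelled, and the toleration list in main is an assumption chosen to mirror the two taints discussed in this article.

```go
package main

import "fmt"

// taint and toleration are simplified stand-ins for the Kubernetes API types.
type taint struct {
	Key    string
	Effect string
}

// toleration models an "Exists"-style toleration only.
type toleration struct {
	Key    string // empty means "any key"
	Effect string // empty means "any effect"
}

// tolerates reports whether at least one toleration matches the taint.
func tolerates(tols []toleration, t taint) bool {
	for _, tol := range tols {
		keyOK := tol.Key == "" || tol.Key == t.Key
		effectOK := tol.Effect == "" || tol.Effect == t.Effect
		if keyOK && effectOK {
			return true
		}
	}
	return false
}

// toleratesAll reports whether every taint on the node is tolerated.
func toleratesAll(tols []toleration, taints []taint) bool {
	for _, t := range taints {
		if !tolerates(tols, t) {
			return false
		}
	}
	return true
}

func main() {
	// Taints on a node without a working CNI plugin, as listed earlier.
	nodeTaints := []taint{
		{Key: "node.kubernetes.io/network-unavailable", Effect: "NoSchedule"},
		{Key: "node.kubernetes.io/not-ready", Effect: "NoExecute"},
	}
	// Assumed tolerations carried by a hostNetwork DaemonSet Pod.
	daemonSetTols := []toleration{
		{Key: "node.kubernetes.io/network-unavailable", Effect: "NoSchedule"},
		{Key: "node.kubernetes.io/not-ready", Effect: "NoExecute"},
	}
	fmt.Println("DaemonSet Pod schedulable:", toleratesAll(daemonSetTols, nodeTaints))
	fmt.Println("ordinary Pod schedulable:", toleratesAll(nil, nodeTaints))
}
```

An ordinary Pod without these tolerations stays Pending until the taints are removed, which is exactly what we want for Pods that need the Pod network.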

To resolve the circular dependency issue (kubelet requires the network plugin to start Pods, while the network plugin requires Pods to run), kubelet has a mechanism: when a node is in a network not ready state, only hostNetwork mode Pods are allowed to run. This means kubelet can run hostNetwork mode Pods even without a CNI plugin installed.

Since calico-node runs in hostNetwork mode, kubelet starts calico-node first.

Relevant source code: kubelet.go

    // If the network plugin is not ready and the pod is not host network, return an error
    if err := kl.runtimeState.networkErrors(); err != nil && !kubecontainer.IsHostNetworkPod(pod) {
        kl.recorder.Eventf(pod, v1.EventTypeWarning, events.NetworkNotReady, "%s: %v", NetworkNotReadyErrorMsg, err)
        return false, fmt.Errorf("%s: %v", NetworkNotReadyErrorMsg, err)
    }

3 calico-node Installs CNI Binary and Configuration Files

calico-node includes an initContainer specifically for installing the CNI binary and configuration files.

Configuration reference: calico-node.yaml

    # This container installs the CNI binaries
    # and CNI network config file on each node.
    - name: install-cni
      image: {{.Values.cni.image}}:{{ .Values.version }}
      imagePullPolicy: {{.Values.imagePullPolicy}}
      command: ["/opt/cni/bin/install"]

CRI detects CNI readiness by checking for the CNI binary and configuration files. Once kubelet detects that the CNI plugin is ready, the node’s status is updated to Ready.

When the node becomes Ready, the Node Lifecycle Controller in controller-manager removes the taints.

4 calico-node Initializes IP Pool

When calico-node starts, it creates a default IP Pool, which is essential for IP allocation by CNI.

Startup command reference: rc.local

    # Run the startup initialization script.
    calico-node -startup || exit 1

calico-node creates the default IP Pool based on the CALICO_IPV4POOL_CIDR, CALICO_IPV6POOL_CIDR environment variables, and the kubeadm-config ConfigMap.
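As a rough illustration of how such inputs might be combined for IPv4, the sketch below picks a CIDR from an environment value, then from a kubeadm Pod subnet, then from a fallback. The precedence order, the fallback value 192.168.0.0/16, and the function name choosePoolCIDR are assumptions made for this example; consult startup.go for the real logic.

```go
package main

import (
	"fmt"
	"net"
	"os"
)

// fallbackIPv4Pool is an assumed default used when nothing else is set.
const fallbackIPv4Pool = "192.168.0.0/16"

// choosePoolCIDR returns the first non-empty candidate, in the assumed
// order: environment value, kubeadm Pod subnet, fallback. The result is
// validated as a CIDR before it is returned.
func choosePoolCIDR(envValue, kubeadmPodSubnet string) (*net.IPNet, error) {
	candidate := envValue
	if candidate == "" {
		candidate = kubeadmPodSubnet
	}
	if candidate == "" {
		candidate = fallbackIPv4Pool
	}
	_, cidr, err := net.ParseCIDR(candidate)
	if err != nil {
		return nil, fmt.Errorf("invalid pool CIDR %q: %w", candidate, err)
	}
	return cidr, nil
}

func main() {
	// The kubeadm subnet is left empty here; a real caller would read it
	// from the kubeadm-config ConfigMap.
	cidr, err := choosePoolCIDR(os.Getenv("CALICO_IPV4POOL_CIDR"), "")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println("default IPv4 pool:", cidr)
}
```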

Relevant source code: startup.go

    // Configure IP Pool
    configureIPPools(ctx, cli, kubeadmConfig)

5 calico-ipam Allocates IP

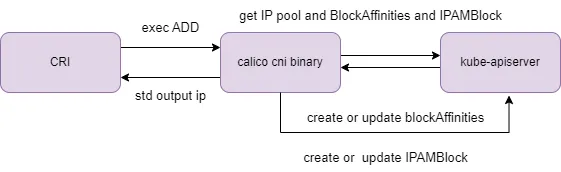

When the CRI executes the calico-ipam binary (upon receiving a Pod IP request), it queries the apiserver for IPPool and BlockAffinities. Based on these resources, it determines available IP blocks and assigns IPs, updating the IPAMBlock CRD to track IP usage.

Notably, calico-ipam does not directly depend on calico-node or other Calico components, allowing it to function independently even before calico-kube-controllers starts.

Relevant source code: ipam_plugin.go

6 Summary

The “chicken-and-egg” problem of CNI is resolved through two mechanisms:

- Allowing hostNetwork mode Pods to run on NotReady nodes.
- Ensuring CNI binaries can operate independently.

After installing Calico CNI, the sequence is as follows:

- kubelet starts the hostNetwork calico-node Pod.
- calico-node’s initContainer installs the CNI.
- The node transitions to Ready.
- calico-node creates a default IP Pool.
- calico-ipam independently assigns IPs for calico-kube-controllers Pod.
- All Calico Pods start successfully.

This article focuses on IP allocation in the CNI specification and does not cover details such as container network interfaces, IPAM block maintenance, or WorkloadEndpoint management.
