Koordinator Descheduler: LowNodeLoad Plugin Enhancing Node Balance and Application Stability

The article "A Deep Dive into HighNodeUtilization and LowNodeUtilization Plugins with Descheduler" discusses two node utilization plugins of the descheduler in the Kubernetes community. Both plugins use requests to calculate the resource usage of nodes, so they cannot address node overheating (where a small number of nodes have much higher actual resource usage than most other nodes). This article introduces the LowNodeLoad plugin of the Koordinator descheduler, which solves this problem by classifying nodes as high-watermark, normal, or low-watermark nodes based on their actual resource usage.

The koordinator descheduler is compatible with the community descheduler while adding two plugins, MigrationController and LowNodeLoad (added in Koordinator v1.1).

The LowNodeLoad plugin of Koordinator is similar to the lowNodeUtilization plugin in that it evicts pods from high-watermark nodes so that they can be rescheduled onto low-watermark nodes. Unlike lowNodeUtilization, however, it classifies nodes based on their actual load, which effectively addresses node resource overheating.

The MigrationController plugin provides resource reservation and arbitration mechanisms (interception mechanisms) to ensure application stability when pods are evicted by descheduler.

This article only introduces the LowNodeLoad plugin, while the MigrationController plugin will be discussed in subsequent articles. This article is based on Koordinator v1.4 and Descheduler v0.28.1.

1 Execution Principle

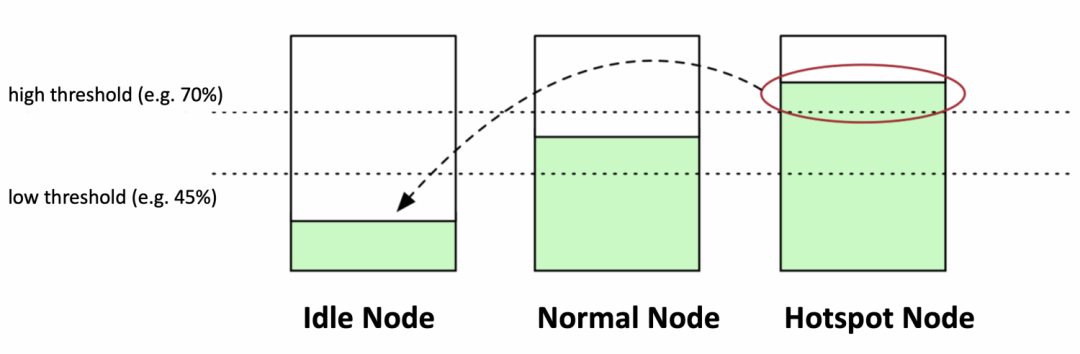

Similar to lowNodeUtilization, this plugin has two thresholds: the high watermark and the low watermark. If the utilization of all types of resources in a node is below the low watermark, it’s considered an Idle node. If at least one resource’s utilization is above the high watermark, it’s a Hotspot node. All other nodes are Normal nodes.

Its goal is to evict pods from Hotspot nodes to Idle nodes, thus converting Hotspot nodes into Normal nodes, achieving a balance in resource utilization across nodes.
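The classification rule above can be sketched in a few lines of Python. This is a minimal illustration, not Koordinator's implementation: the function name `classify_node` and the dictionary layout are assumptions made for the example, and the threshold values are borrowed from the configuration example later in this article.

```python
# Hypothetical helper: classify one node from per-resource utilization percentages.
LOW = {"cpu": 45, "memory": 55}    # low watermark, in percent
HIGH = {"cpu": 75, "memory": 80}   # high watermark, in percent


def classify_node(utilization, low=LOW, high=HIGH):
    """Return "hotspot", "idle", or "normal" for a single node."""
    # Hotspot: at least one resource is above its high watermark.
    if any(utilization[res] > high[res] for res in high):
        return "hotspot"
    # Idle: every resource is below its low watermark.
    if all(utilization[res] < low[res] for res in low):
        return "idle"
    # Everything else is a Normal node.
    return "normal"


states = {
    "node-a": classify_node({"cpu": 30, "memory": 40}),
    "node-b": classify_node({"cpu": 80, "memory": 40}),
    "node-c": classify_node({"cpu": 50, "memory": 40}),
}
```

Here `node-b` is a Hotspot because CPU alone exceeds its high watermark, while `node-c` is Normal because CPU is no longer below the low watermark.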

2 Differences from the lowNodeUtilization Plugin

The LowNodeLoad plugin offers several additional features compared to the lowNodeUtilization plugin, including support for node resource pools, additional filtering for Hotspot nodes to prevent eviction due to resource utilization fluctuations, setting weights for different resource types, and enhancing pod sorting algorithms.

2.1 Execution Workflow

The execution workflow is similar to the lowNodeUtilization plugin in the community descheduler, but it integrates the preEvictionFilter step into the pod filtering phase, making the entire process more logical and easier to understand.

The main steps are as follows:

- Select Nodes: Choose nodes for pod eviction.

- Order Nodes: Sort the nodes.

- Filter Pods: Identify the list of pods eligible for eviction from nodes.

- Order Pods: Sort the pods.

- Evict Pod: Execute the eviction.
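The five steps above can be read as a simple pipeline. The following Python skeleton is only a sketch of that control flow: every callable passed in (`select_nodes`, `order_nodes`, `filter_pods`, `order_pods`, `evict`) is a hypothetical stand-in, and the sketch does not model when the plugin stops evicting from a node.

```python
def run_balance(nodes, select_nodes, order_nodes, filter_pods, order_pods, evict):
    """Run one descheduling pass and return the pods that were evicted."""
    evicted = []
    # Steps 1 and 2: choose the source nodes, then sort them.
    candidates = order_nodes(select_nodes(nodes))
    for node in candidates:
        # Steps 3 and 4: keep only evictable pods, then sort them.
        for pod in order_pods(filter_pods(node)):
            # Step 5: try to evict; the evictor may refuse.
            if evict(node, pod):
                evicted.append(pod)
    return evicted


# Toy data (hypothetical): node name -> pods on that node.
PODS = {"node-a": ["web-2", "web-1"], "node-b": ["db-1"]}

result = run_balance(
    nodes=list(PODS),
    select_nodes=lambda ns: [n for n in ns if n == "node-a"],
    order_nodes=sorted,
    filter_pods=lambda n: PODS[n],
    order_pods=sorted,
    evict=lambda n, p: True,
)
```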

2.2 Node Resource Utilization Calculation Method

The lowNodeUtilization plugin calculates node resource utilization as the sum of the requests of all pods on the node, expressed as a percentage of the node's allocatable resources.

The LowNodeLoad plugin calculates node resource utilization as the actual resource usage (the sum of the usage of all pods plus system resource usage), expressed as a percentage of the node's allocatable resources.
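The difference between the two calculations is easy to see with numbers. The sketch below uses made-up CPU figures in millicores; the function names are hypothetical and exist only for this illustration.

```python
def request_utilization(pod_requests, allocatable):
    """Request-based utilization in percent (lowNodeUtilization style)."""
    return 100.0 * sum(pod_requests) / allocatable


def load_utilization(pod_usages, system_usage, allocatable):
    """Usage-based utilization in percent (LowNodeLoad style)."""
    return 100.0 * (sum(pod_usages) + system_usage) / allocatable


ALLOCATABLE_CPU = 8000  # millicores, hypothetical node

by_request = request_utilization([1000, 1000, 2000], ALLOCATABLE_CPU)
by_load = load_utilization([900, 2500, 3000], 400, ALLOCATABLE_CPU)
```

With these numbers the node looks half empty by requests (50%) but is actually running at 85% CPU, which is exactly the overheating case that request-based plugins miss.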

In the select nodes step, the actual resource usage of nodes is obtained from custom resource NodeMetric corresponding to each node.

The NodeMetric custom resource is maintained and updated by the koordlet component on each node. The koordlet plays a role in Koordinator similar to that of the kubelet in Kubernetes: it collects and reports node status, adjusts pod resource allocation, adjusts pod cgroup settings, and so on. The koordlet on each node writes the system resource usage and the per-pod resource usage to that node's NodeMetric resource.

Thus, the LowNodeLoad plugin of koordinator descheduler relies on the koordlet component to obtain node resource usage rather than relying on metrics-server.

2.3 Support for Node Pools

There’s a common scenario where clusters divide nodes into multiple types, usually distinguished by labels. Each type of node collection is termed a node pool. For example, different business tasks may be scheduled on different resource node pools, each with its own threshold settings.

The lowNodeUtilization plugin in the community does not support node pools directly, but this requirement can be indirectly met by configuring multiple deschedulers, each with its own global nodeSelector and lowNodeUtilization settings for each node pool.

The LowNodeLoad plugin in Koordinator natively supports node resource pools, allowing NodeSelector, HighThresholds, LowThresholds, ResourceWeights, and other settings to be configured separately for each node pool.
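Conceptually, per-pool configuration means that each node is first matched to a pool by its labels and then judged against that pool's thresholds. The Python sketch below illustrates the idea only: the `NodePool` class, its field names, the equality-only selector, and the first-match rule are all assumptions for this example and are not taken from Koordinator's source.

```python
from dataclasses import dataclass, field


@dataclass
class NodePool:
    """Hypothetical per-pool settings, mirroring the options named above."""
    name: str
    node_selector: dict
    low_thresholds: dict
    high_thresholds: dict
    resource_weights: dict = field(default_factory=dict)


def pool_for_node(node_labels, pools):
    """Return the first pool whose selector labels all match the node, else None."""
    for pool in pools:
        if all(node_labels.get(k) == v for k, v in pool.node_selector.items()):
            return pool
    return None


pools = [
    NodePool("batch", {"pool": "batch"}, {"cpu": 60}, {"cpu": 90}),
    NodePool("online", {"pool": "online"}, {"cpu": 45}, {"cpu": 75}, {"cpu": 2, "memory": 1}),
]

matched = pool_for_node({"pool": "online", "zone": "a"}, pools)
unmatched = pool_for_node({"zone": "a"}, pools)
```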

2.4 Filtering Nodes Requiring Eviction Based on State Mechanism

Because node requests change infrequently, the lowNodeUtilization plugin in the community can classify nodes directly by their current request utilization.

Actual resource usage, however, changes much more frequently. To avoid triggering pod eviction on short-lived usage fluctuations, the LowNodeLoad plugin classifies a node only after the same judgment has been made several times in a row within a fixed time window. Nodes are categorized into abnormal and normal states. If a node is identified as a Hotspot node multiple times consecutively within the window, it is considered to be in an abnormal state. Conversely, if a node is identified as a Normal node multiple times consecutively within the same window, it is considered to be in a normal state.

During the select nodes step, the counters for abnormal state are incremented and the counters for normal state are reset to zero for Hotspot nodes. Nodes that meet the criteria for abnormal state are then filtered, and only these nodes undergo pod eviction. After eviction, when a node returns to a Normal state, the counters for abnormal and normal states are reset.
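The counter mechanism can be sketched as follows. This is a simplified illustration under stated assumptions: the class name `AnomalyDetector` and the parameter `consecutive_abnormalities` are hypothetical, the fixed time window is left out, and a single non-Hotspot observation is assumed to reset the abnormal counter.

```python
class AnomalyDetector:
    """Track consecutive Hotspot observations per node (time window omitted)."""

    def __init__(self, consecutive_abnormalities=3):
        self.required = consecutive_abnormalities
        self.abnormal = {}  # node name -> consecutive Hotspot observations
        self.normal = {}    # node name -> consecutive non-Hotspot observations

    def observe(self, node, is_hotspot):
        """Record one observation; return True if the node is now abnormal."""
        if is_hotspot:
            self.abnormal[node] = self.abnormal.get(node, 0) + 1
            self.normal[node] = 0
        else:
            self.normal[node] = self.normal.get(node, 0) + 1
            self.abnormal[node] = 0
        return self.abnormal[node] >= self.required

    def reset(self, node):
        """Clear both counters, for example after the node returns to Normal."""
        self.abnormal[node] = 0
        self.normal[node] = 0


detector = AnomalyDetector(consecutive_abnormalities=3)

# A sustained hotspot becomes abnormal on the third consecutive observation.
sustained = [detector.observe("node-a", hot) for hot in (True, True, True)]

# A brief dip in between restarts the count, so the node is never flagged.
spiky = [detector.observe("node-b", hot) for hot in (True, True, False, True)]
```

Only `node-a` would go on to pod eviction here; the short spike pattern on `node-b` is filtered out.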

2.5 Resource Types with Weights

In the node sorting phase, the lowNodeUtilization plugin directly sorts nodes from highest to lowest based on the sum of requests for all resource types in the node.

The LowNodeLoad plugin allows setting weights for each resource type (at the resource pool level), so that certain resources count more in the ordering. For instance, assigning a higher weight to CPU means that nodes with high CPU usage are prioritized for pod eviction. Nodes are sorted by node score from highest to lowest. The node score is the weighted average of per-resource utilization, where each resource's utilization is expressed as a permillage of its allocatable amount:

node score = sum((usage value of each resource * 1000 / allocatable of each resource) * weight of resource) / sum(weight of resource)
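The node score formula can be checked with a small worked example. The Python below is a sketch: the function name `weighted_score` and the sample numbers are made up, and floating-point division is used for readability, which may differ from the arithmetic in the actual plugin.

```python
def weighted_score(usage, allocatable, weights):
    """Weighted average of per-resource utilization, in permillage of allocatable."""
    weighted_sum = sum(
        (usage[res] * 1000 / allocatable[res]) * weight
        for res, weight in weights.items()
    )
    return weighted_sum / sum(weights.values())


ALLOCATABLE = {"cpu": 8000, "memory": 32}  # millicores, GiB (hypothetical)
NODES = {
    "node-a": {"cpu": 6000, "memory": 16},  # CPU-heavy: 750 and 500 permille
    "node-b": {"cpu": 4000, "memory": 28},  # memory-heavy: 500 and 875 permille
}

equal = {"cpu": 1, "memory": 1}
cpu_heavy = {"cpu": 3, "memory": 1}

score_a_equal = weighted_score(NODES["node-a"], ALLOCATABLE, equal)
score_b_equal = weighted_score(NODES["node-b"], ALLOCATABLE, equal)
score_a_cpu = weighted_score(NODES["node-a"], ALLOCATABLE, cpu_heavy)
score_b_cpu = weighted_score(NODES["node-b"], ALLOCATABLE, cpu_heavy)

# Highest score first: these nodes are handled first during eviction.
order_cpu = sorted(
    NODES,
    key=lambda n: weighted_score(NODES[n], ALLOCATABLE, cpu_heavy),
    reverse=True,
)
```

With equal weights the memory-heavy node scores higher (687.5 against 625.0). Tripling the CPU weight reverses the order (687.5 for `node-a` against 593.75 for `node-b`), so the CPU-heavy node is processed first.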

2.6 Pod Sorting Algorithm

The sorting algorithm of the lowNodeUtilization plugin relies solely on priority and QoS, which may not fully meet enterprise production requirements as it doesn’t consider actual resource usage of pods, pod-deletion-cost, or business importance.

The LowNodeLoad plugin sorts pods based on their resource usage, Koordinator’s PriorityClass, Priority, QoS Class, Koordinator QoSClass, pod-deletion-cost, EvictionCost, and creation time dimensions.

Specific sorting rules:

| Sorting Field | Dimension | Sorting Rules |
|---|---|---|
| 1st Sorting Field | Koordinator PriorityClass | Sorted in the order of “koord-free”, “koord-batch”, “koord-mid”, “koord-prod”, "" (no value or empty) |
| 2nd Sorting Field | Priority | Sorted in ascending order |
| 3rd Sorting Field | QoS Class | Sorted in the order of BestEffort, Burstable, Guaranteed |
| 4th Sorting Field | Koordinator QoSClass | Sorted in the order of “BE”, “LS”, “LSR”, (“LSE”, “SYSTEM”), "" (no value or empty) |
| 5th Sorting Field | Pod-deletion-cost | Sorted in ascending order |
| 6th Sorting Field | EvictionCost | Sorted in ascending order |
| 7th Sorting Field | Pod Resource Usage | 1. Pods without monitoring data are placed before pods with monitoring data. 2. Among pods with monitoring data, pods are sorted in descending order of pod score, that is, from highest to lowest resource usage. Pod score = sum((usage value of each resource * 1000 / allocatable resources on the node) * resource weight) / sum(resource weights) |
| 8th Sorting Field | Pod Creation Time | Pods with later creation times are placed before pods with earlier creation times |
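A multi-field ordering like the one in the table maps naturally onto a tuple sort key. The Python sketch below is illustrative only: the `Pod` class and its field names are hypothetical, and treating “LSE” and “SYSTEM” as the same rank is one reading of the parenthesized pair in the table.

```python
from dataclasses import dataclass
from typing import Optional

KOORD_PRIORITY_RANK = {"koord-free": 0, "koord-batch": 1, "koord-mid": 2, "koord-prod": 3, "": 4}
QOS_RANK = {"BestEffort": 0, "Burstable": 1, "Guaranteed": 2}
# Assumption: "LSE" and "SYSTEM" share one rank.
KOORD_QOS_RANK = {"BE": 0, "LS": 1, "LSR": 2, "LSE": 3, "SYSTEM": 3, "": 4}


@dataclass
class Pod:
    name: str
    koord_priority_class: str = ""
    priority: int = 0
    qos_class: str = "Burstable"
    koord_qos: str = ""
    deletion_cost: int = 0
    eviction_cost: int = 0
    usage_score: Optional[float] = None  # None means no monitoring data
    created_at: float = 0.0              # Unix timestamp


def eviction_sort_key(pod):
    """Smaller keys sort first, i.e. are evicted earlier."""
    return (
        KOORD_PRIORITY_RANK[pod.koord_priority_class],
        pod.priority,
        QOS_RANK[pod.qos_class],
        KOORD_QOS_RANK[pod.koord_qos],
        pod.deletion_cost,
        pod.eviction_cost,
        pod.usage_score is not None,   # pods without metrics come first
        -(pod.usage_score or 0.0),     # then highest usage first
        -pod.created_at,               # then newest first
    )


pods = [
    Pod("a", koord_priority_class="koord-prod", priority=9000, usage_score=500.0),
    Pod("b", koord_priority_class="koord-batch", priority=5000, usage_score=100.0),
    Pod("c", koord_priority_class="koord-batch", priority=5000, usage_score=300.0),
    Pod("d", koord_priority_class="koord-batch", priority=5000),
]

ordered = [p.name for p in sorted(pods, key=eviction_sort_key)]
```

The batch pods come before the production pod, the batch pod without monitoring data comes first among them, and the remaining two are ordered by descending usage score.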

3 Configuration Example

In the official Helm chart of the Koordinator descheduler, the LowNodeLoad plugin is not enabled by default. The configuration below enables only the MigrationController plugin:

    apiVersion: descheduler/v1alpha2
    clientConnection:
      acceptContentTypes: ""
      burst: 100
      contentType: application/vnd.kubernetes.protobuf
      kubeconfig: ""
      qps: 50
    deschedulingInterval: 10s
    enableContentionProfiling: true
    enableProfiling: true
    healthzBindAddress: 0.0.0.0:10251
    kind: DeschedulerConfiguration
    leaderElection:
      leaderElect: true
      leaseDuration: 15s
      renewDeadline: 10s
      resourceLock: leases
      resourceName: koord-descheduler
      resourceNamespace: koordinator-system
      retryPeriod: 2s
    metricsBindAddress: 0.0.0.0:10251
    profiles:
    - name: koord-descheduler
      pluginConfig:
      - args:
          apiVersion: descheduler/v1alpha2
          arbitrationArgs:
            enabled: true
            interval: 500ms
          defaultJobMode: ReservationFirst
          defaultJobTTL: 5m0s
          evictBurst: 1
          evictFailedBarePods: false
          evictLocalStoragePods: false
          evictQPS: "10"
          evictSystemCriticalPods: false
          evictionPolicy: Eviction
          ignorePvcPods: false
          kind: MigrationControllerArgs
          maxConcurrentReconciles: 1
          maxMigratingPerNode: 2
          namespaces:
            exclude:
            - kube-system
            - koordinator-system
          objectLimiters:
            workload:
              duration: 5m0s
          schedulerNames:
          - koord-scheduler
        name: MigrationController
      plugins:
        balance: {}
        deschedule: {}
        evict:
          enabled:
          - name: MigrationController
        filter:
          enabled:
          - name: MigrationController

Below is an example of configuring the plugin separately:

    profiles:
    - name: koord-descheduler
      pluginConfig:
      - name: LowNodeLoad
        args:
          apiVersion: descheduler/v1alpha2
          kind: LowNodeLoadArgs
          evictableNamespaces:
            exclude:
            - kube-system
          useDeviationThresholds: false
          lowThresholds:
            cpu: 45
            memory: 55
          highThresholds:
            cpu: 75
            memory: 80
      plugins:
        balance:
          enabled:
          - name: LowNodeLoad
        deschedule: {}
        evict:
          disabled:
          - name: "*"
          enabled:
          - name: DefaultEvictor
        filter:
          disabled:
          - name: "*"

Enabling both the LowNodeLoad plugin and MigrationController plugin:

    profiles:
    - name: koord-descheduler
      pluginConfig:
      - args:
          apiVersion: descheduler/v1alpha2
          arbitrationArgs:
            enabled: true
            interval: 500ms
          defaultJobMode: ReservationFirst
          defaultJobTTL: 5m0s
          evictBurst: 1
          evictFailedBarePods: false
          evictLocalStoragePods: false
          evictQPS: "10"
          evictSystemCriticalPods: false
          evictionPolicy: Eviction
          ignorePvcPods: false
          kind: MigrationControllerArgs
          maxConcurrentReconciles: 1
          maxMigratingPerNode: 2
          namespaces:
            exclude:
            - kube-system
            - koordinator-system
          objectLimiters:
            workload:
              duration: 5m0s
          schedulerNames:
          - koord-scheduler
        name: MigrationController
      - name: LowNodeLoad
        args:
          apiVersion: descheduler/v1alpha2
          kind: LowNodeLoadArgs
          evictableNamespaces:
            exclude:
            - kube-system
          useDeviationThresholds: false
          lowThresholds:
            cpu: 45
            memory: 55
          highThresholds:
            cpu: 75
            memory: 80
      plugins:
        balance:
          enabled:
          - name: LowNodeLoad
        evict:
          enabled:
          - name: MigrationController
        filter:
          enabled:
          - name: MigrationController

4 Summary

The LowNodeLoad plugin of the Koordinator descheduler is not a general-purpose descheduler plugin. It relies on the koordlet within Koordinator to obtain pod and node resource usage information, so the Koordinator descheduler cannot be deployed on its own just to use the LowNodeLoad plugin.

The design of the node state mechanism is overly complex, with intermediate states for high-utilization nodes. Replacing the fixed time window with a sliding window algorithm might simplify the implementation.

The pod sorting algorithm considers actual pod usage and the importance of pods (via Koordinator PriorityClass). It also supports pod-deletion-cost and EvictionCost (allowing manual adjustment of sorting to some extent), which is a good design, although it may not meet all enterprise requirements.

Configuration of resource types with weights is supported. When multiple resource types are used to calculate node resource usage, nodes with a high usage rate of a heavily weighted resource can be prioritized for pod eviction (indicating a more urgent need to reduce the load on that node).

Support for node resource pools allows separate configuration of HighThresholds, LowThresholds, and ResourceWeights for each pool, making the plugin better suited to the business characteristics of each resource pool.

Combining LowNodeLoad with the MigrationController plugin enables resource reservation and arbitration mechanisms. The resource reservation feature requires support from the Koordinator scheduler, while the arbitration mechanism provides additional interception capabilities, such as limits on the number of pods evicted per workload (similar to PodDisruptionBudget).

5 Reference

Koordinator v1.1 Release: Load-awareness and Interference Detection Collection